Description

Our demonstration will show an example LHC physics analysis that makes use of several software and hardware components of the next-generation Data Grid, which is being developed to support scientists in many world regions who are working on the LHC. Specifically, we will use a Web services portal architecture called Clarens, developed at the California Institute of Technology (Caltech) for scientific data and services. The Clarens dataserver includes Grid-based authentication and services for a range of clients, from server-class systems through personal desktops and laptops to handheld PDA devices.

The demonstration involves an analysis tool called ROOT as a Grid-authenticated Clarens client, an XML-based 3D detector geometry viewer (COJAC), and an agent-based Grid monitoring system (MonaLISA). Physics event collections, aggregated into large disk-resident files, will be replicated across wide area networks from Clarens servers situated in

Grid Computing for LHC Experiments

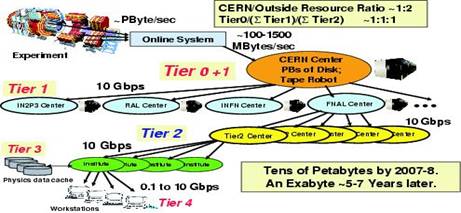

The Grid is an ideal metaphor for the distributed computing challenges posed by experiments at CERN's LHC. Enormous data volumes (many Petabytes, or millions of Gigabytes) are expected to accumulate rapidly when the LHC begins operating. Processing all the data centrally at CERN is neither feasible nor desirable. Instead, the task must be distributed among collaborating institutes worldwide, so that the massive aggregate capacity of those distributed facilities can be brought to bear. The distribution of data between the host laboratory and those institutes is not one-way: large quantities of simulation data and analysis results need to return to CERN and the other institutes as demand dictates. We plan for a highly dynamic, workflow-oriented system that will operate under severe global resource constraints. Our extensive systems modeling studies have determined that a hierarchical Data Grid is required:
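To give a feel for the data volumes mentioned above, the following minimal Python sketch converts Petabytes to Gigabytes and estimates how long a single network link would need to move a given volume. It assumes decimal (SI) units, and the 10 Gbit/s link rate in the usage note is a purely illustrative value, not a figure from the demonstration; the function names are hypothetical.

```python
# Assumption: decimal (SI) units, 1 PB = 10^15 bytes and 1 GB = 10^9 bytes.
GB_PER_PB = 10**6
SECONDS_PER_DAY = 86400


def petabytes_to_gigabytes(petabytes: float) -> float:
    """Convert a volume in Petabytes to Gigabytes."""
    return petabytes * GB_PER_PB


def transfer_days(petabytes: float, link_gbit_per_s: float) -> float:
    """Idealized time in days to move `petabytes` over one fully used link.

    Ignores protocol overhead, contention, and storage speed.
    """
    bits = petabytes * 10**15 * 8
    seconds = bits / (link_gbit_per_s * 10**9)
    return seconds / SECONDS_PER_DAY


if __name__ == "__main__":
    print(petabytes_to_gigabytes(1))      # Gigabytes in one Petabyte
    print(round(transfer_days(1, 10), 2))  # days for 1 PB at a hypothetical 10 Gbit/s
```

Under these assumptions, one Petabyte occupies a fully dedicated 10 Gbit/s link for more than nine days, which illustrates why both storage and processing are spread across many sites.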

Hardware/Software/Networking

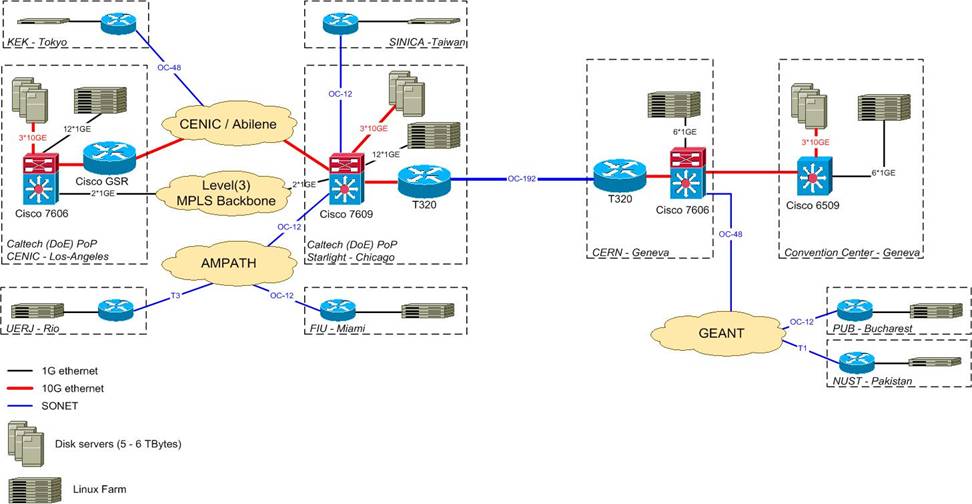

The networking setup we will use for the demonstrations is shown on the reverse of this flyer. The client hardware on the show floor will consist of a set of Itanium servers running 64-bit Linux, and equipped with high speed network interconnects. Remotely, the demonstration will make use of network and computing equipment situated in

Participants

Steven Low & Caltech FAST team, Les Cottrell, Saima Iqbal, Chris Jones, Olivier Martin, Dan Nae

Harvey Newman, Sylvain Ravot, Suresh Singh, Conrad Steenberg, Frank van Lingen, Yang Xia

Support

We are grateful for the support of the companies and organizations whose icons appear on the reverse of this flyer.